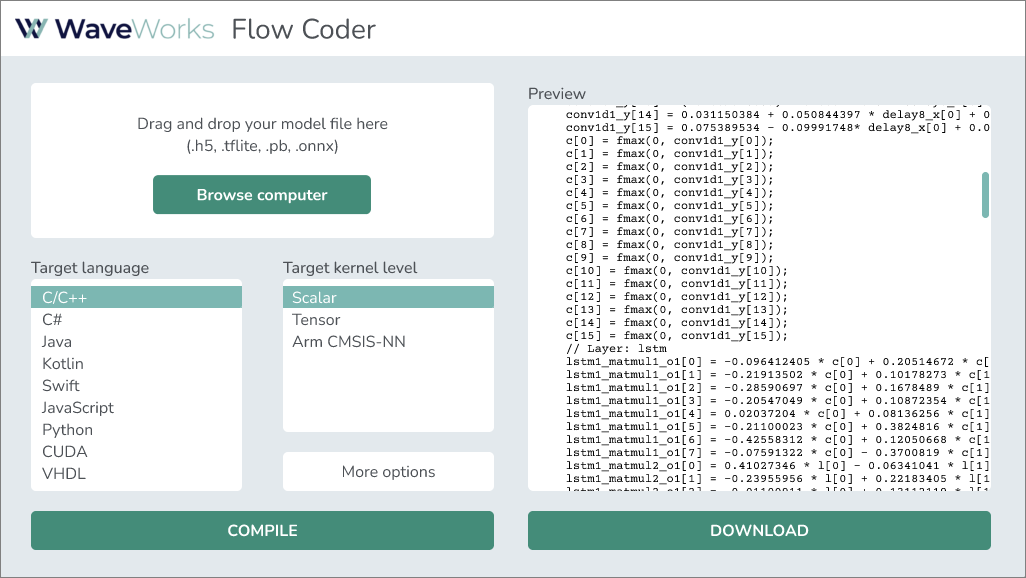

Flow Coder

Flow Coder takes the pain out of integrating a machine learning model into your software or firmware project. It converts trained machine learning models directly into dependency-free source code for many common programming languages, eliminating the complexity and overhead of inference libraries in deployment projects. The compiler can be invoked online, from a desktop tool, or as a single-executable command-line tool, which makes it easy to integrate as a pre-build step in a build pipeline.

The full product is expected to be available in Q4 2024.

Benefits

- Simplicity. Flow Coder can produce a single stand-alone function that consumes an input vector and produces an output vector. That’s it. There are no inference runtimes, libraries or other dependencies to complicate the build process.

- Efficiency. For small models, inference with Flow Coder is expected to run faster and use less memory and power than with commonly used inference libraries. This is partly because the library overhead is eliminated, and partly because all tensor operations are expanded to scalar operations that are then further optimized. The gain is largest for heavily pruned and sparse models, where a latency reduction of 85-90% is expected.

- Flexibility. Although scalar math code is the ultimate target for Flow Coder, it can also produce code targeting higher-level inference libraries such as CMSIS-NN.

- Extensibility. Flow Coder uses FL, a Python dialect, to define the entire decomposition from a high-level Keras model down to scalar math. This allows an expert user to easily define new layers or target new inference libraries. Moreover, users can add FL/Python code defining preprocessing and postprocessing steps to be included in the generated code.

- Readability. The produced code is fully human-readable and gives the user immediate and complete insight into how every layer and every operation is computed.
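The Simplicity, Efficiency and Readability points can be illustrated with a toy code generator. The following is a minimal sketch and not Flow Coder's actual implementation or output format. It assumes a single dense layer with ReLU, given as plain Python lists, and the names `generate_dense`, `reference_dense` and `predict` are hypothetical. The generator expands y = relu(Wx + b) into one scalar expression per output and omits every term whose weight is exactly zero, as in a pruned model. The result is a single stand-alone function with no imports.

```python
# Hypothetical sketch, NOT Flow Coder's implementation or output format.
# Expands a dense layer y = relu(W @ x + b) into scalar expressions, skips
# zero weights (pruned connections), and emits one dependency-free function.


def generate_dense(weights, bias, name="predict", relu=True):
    """Return Python source for a stand-alone function computing one dense layer."""
    lines = [
        f"def {name}(x):",
        f"    # dense layer: {len(weights[0])} inputs -> {len(weights)} outputs",
    ]
    outputs = []
    for j, (row, b) in enumerate(zip(weights, bias)):
        # One scalar term per non-zero weight; zero weights emit no code at all.
        terms = [f"{float(w)!r} * x[{i}]" for i, w in enumerate(row) if w != 0]
        expr = " + ".join(terms + [repr(float(b))])
        if relu:
            expr = f"max(0.0, {expr})"
        lines.append(f"    y{j} = {expr}")
        outputs.append(f"y{j}")
    lines.append(f"    return [{', '.join(outputs)}]")
    return "\n".join(lines) + "\n"


def reference_dense(weights, bias, x, relu=True):
    """Plain loop-based dense layer, used only to check the generated code."""
    out = []
    for row, b in zip(weights, bias):
        s = sum(w * xi for w, xi in zip(row, x)) + b
        out.append(max(0.0, s) if relu else s)
    return out


# A small "pruned" layer: three of the six weights are zero.
W = [[0.5, 0.0, -1.0],
     [0.0, 0.0, 2.0]]
b = [0.1, -0.5]

src = generate_dense(W, b)
print(src)

# Load the generated function; it needs nothing beyond Python built-ins.
namespace = {}
exec(src, namespace)
predict = namespace["predict"]
print(predict([1.0, 2.0, 3.0]))  # [0.0, 5.5]
```

The printed source contains only the surviving terms, for example `y1 = max(0.0, 2.0 * x[2] + -0.5)`. This keeps the computation both cheaper and easy to read line by line. Handling other layer types, constant folding and other target languages is beyond the scope of this sketch.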

Input formats

Initially, the compiler will support the following model formats:

- Keras .h5

- .tflite

- ONNX

The compiler supports all layers and operations, including quantized models.

Target languages

The following languages can be generated:

- C/C++

- Java

- Kotlin

- Swift

- JavaScript

- C#

- Python

- CUDA

- VHDL